Ever since the inception of Team Foundation Server (TFS), there has been a need to harmonize its usage with the ongoing portfolio management efforts of PMO (Project Management Office) teams in bigger companies. In the beginning, the only out-of-the-box way of integrating with tools used by PMOs was the Microsoft Project integration, which allowed a backlog to be managed as a project file.

Later Microsoft introduced the integration of Project Server with TFS 2010 which enabled true IT portfolio management capabilities by the combined TFS/Project Server product: TFS would give you visibility into the Agile software development piece within a larger application portfolio that included project-driven or waterfall projects as well, and other non-software development IT projects would be managed directly with Project Server.

However, the solution has always been complex to maintain, and small to mid-sized companies tried to use just TFS to manage their application portfolio, ignoring Project Server altogether. Since TFS was not built with portfolio management as a baseline requirement, adapting it to manage a larger application portfolio requires trade-offs. One of them is that unless you are using some third-party integration, you need to use a single team project. If that is possible in your company, then it works really well.

Other limitations:

- All teams have to use the same process template: either Scrum, Agile or CMMI, whereas before the idea was to let the teams decide how to self-organize, as long as they provided some rollup data for the PMO.

- Access to each team backlog has to be managed at the area path level.

- Iteration (Sprint) management becomes more complex if teams are not aligned, with different paths that have to be assigned to a team.

- The user interface becomes unwieldy with many dropdowns having hundreds of repos, builds, releases, etc. This has been minimized by adding the ability to favorite your most used items, which always show on top.

The idea of using a single team project to manage a portfolio of products has been around ever since TFS was introduced, and was made popular by Greg Boer’s post “TFS vNext: Configuring your project to have a master backlog and sub-teams”, based on Microsoft’s own experience of using TFS.

After 2017, the Azure DevOps team discontinued the out-of-the-box integration with Project Server. It makes sense, as Project Server is, to Azure DevOps, just another third party, and it now integrates as other third parties do: using a connector.

Since 2010, TFS, then VSTS, and now Azure Boards have been evolving features that have increased their portfolio management capabilities, such as cross-project queries, the ability to move an item from one team project to another, and dependency management.

However, when going through the Portfolio Management article in Microsoft’s Azure DevOps documentation, most users do not realize an underlying assumption that is not mentioned in the article: you still need everything in a single team project, both the management and execution backlogs. The same assumption is used throughout the documentation, such as the Agile Culture article, or the Scale Agile to Large Teams article.

One option for a company would be to emulate Microsoft’s Agile culture, and have teams create and manage their own Stories or PBIs, as suggested by the Line of Autonomy below. In this way the top level with Epics and Features can reside in a different team project, with Stories or PBIs in each individual team project.

The caveat is that although the relationship between Features and Stories still exists, the Stories are not shown on any Boards or Queries in the original Portfolio project. That allows for a true separation of concerns between Management and Teams. Smaller companies might want to manage Stories together though, and for them this option won’t work.

For companies that want to use separate team projects, and still do portfolio management, the documentation suggests using Delivery Plans. It works as a graphical query of work items, and it is useful when comparing timelines of User Stories and PBIs across teams. It does not however extend a Feature or an Epic to the sum of the iterations of the contained User Stories: it requires both high level items to be assigned to an iteration or sprint, which goes against normal usage of both, where Features and Epics are delivered across many sprints. Notice that the following picture from the documentation has all Features spanning just a single Sprint. In this view the item called “Change initial view” is being moved from Sprint 50 to Sprint 51:

More recently, the introduction of Feature Timelines has fixed this issue as it allows Features to span over several sprints, but it does not work across team projects:
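The rollup discussed above, where a Feature is extended to cover the sprints of its contained Stories, can be illustrated with a minimal sketch. The `Story` fields, the sample data and the `feature_spans` helper are all hypothetical and only show the idea; this is not the Azure DevOps data model or API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Story:
    """A child work item. Hypothetical fields, not an Azure DevOps schema."""
    title: str
    feature: str  # title of the parent Feature
    sprint: int   # number of the sprint the Story is assigned to


def feature_spans(stories):
    """Return {feature: (first_sprint, last_sprint)} derived from child Stories."""
    spans = {}
    for story in stories:
        # Start from the Story's own sprint the first time a Feature is seen.
        first, last = spans.get(story.feature, (story.sprint, story.sprint))
        spans[story.feature] = (min(first, story.sprint), max(last, story.sprint))
    return spans


# Illustrative sample data only.
stories = [
    Story("Login form", "Authentication", 50),
    Story("Password reset", "Authentication", 52),
    Story("Change initial view", "Dashboard", 51),
]

for feature, (first, last) in sorted(feature_spans(stories).items()):
    print(f"{feature}: Sprint {first} to Sprint {last}")
```

With this kind of derivation a Feature never needs its own iteration assignment: its span simply follows the Stories it contains.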

A compromise for these limitations, while they are being worked out by the Azure DevOps product team, is to keep all the work items in a single team project, and have Repos, Builds, Releases and Packages/Artifacts in separate team projects. It is possible to link Stories and Tasks to code commits/check-ins, Branches, Builds and Releases by referring directly to the work item ID. You will, however, have to use the same process template, as mentioned before.
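As a small illustration of referring to work items by ID, here is a sketch that pulls work item IDs out of a commit message. It assumes the common convention of mentioning an item as `#<ID>`; the regular expression, the `mentioned_work_items` helper and the sample message are hypothetical, and this is not how Azure DevOps itself resolves links.

```python
import re

# Assumed convention: a work item is mentioned as "#<ID>" in the commit message.
WORK_ITEM_MENTION = re.compile(r"#(\d+)")


def mentioned_work_items(commit_message):
    """Return the distinct work item IDs mentioned in a message, in order of appearance."""
    seen = []
    for match in WORK_ITEM_MENTION.finditer(commit_message):
        work_item_id = int(match.group(1))
        if work_item_id not in seen:
            seen.append(work_item_id)
    return seen


# Hypothetical commit message with one repeated mention.
message = "Fix null check in order service (#1042), follow-up to #987 and #1042"
print(mentioned_work_items(message))
```

Because the ID is the only thing carried in the message, a helper like this could be used for reporting regardless of which team project holds the repository.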

Having now understood the limitations of implementing Portfolio Management out of the box, you can decide between one of the strategies above or to use some 3rd party extension to enable it.

Most don’t realize it but the current trend is the third time that “cloud computing” has achieved preeminence, having failed twice before.

Cloud computing has been with us much longer than most of us are aware, unless you are a historian or have lived through it. I can only claim to have witnessed the latter part, the last ten years. So instead of a detailed history, let’s tell the story of cloud computing in broad strokes and get some insight into why, this time, it is here to stay. We can think of three overlapping waves that led to the current state:

The First Wave of Cloud Computing – the 60’s

I recall in the early nineties, just when I was starting to use brand new Windows NT at my first job, DEC had gone broke, and Unix (not Linux) was THE standard for distributed computing, I came across an odd, old book from 1966 that was being discarded by the library: The Challenge of the Computer Utility by Douglas F. Parkhill.

I hesitated to take it home; it did not fit anything I knew about computing. However, this short book was fascinating. It talked about computing power as similar to electricity or water, something that could be shared and channeled to be used by millions, and the economics behind it. I kept the book, feeling that the technology behind it was already three decades old, but that the economics part of it still seemed relevant.

Only in the early 2010’s, with the coming of Cloud Computing, did I finally become aware of the importance of that book, as I got more and more into the new things called the Azure and Amazon clouds.

A funny fact is that the book itself has a short history of computing in the first four chapters (chapter four: “Early Computing Utilities”), and only in chapter five does it move on to the then state of the art in Time Sharing Systems. Chapter six is on the economic aspects of computing utilities, and is pretty much visionary in anticipating all that is going on now with the evolving pricing models of AWS and Azure.

Despite the optimism of Parkhill and others, the concept of a public computing utility was still in its infancy, considering the technical complexity of the problem. It continued to evolve as private time-sharing systems, with DEC/UNIX systems accelerating in the 70’s and becoming mainstream in the 80’s.

The Second Wave of Cloud Computing – the 90’s

The second overlapping wave came by capitalizing on technical advancements as a foundation to evolve the economics of utility computing. Perot, with EDS, was one of the early proponents of selling computer time, not hardware, to customers. He took the lead in the 60’s, and by the early 90’s the outsourcing industry had become a complex services segment of IT, with hundreds of companies that would not only sell computer time but also take over your IT services as a whole.

It became a mantra of the day to focus on core business and outsource all non-core business functions to these service bureaus, as they had sometimes been called since the early days. That meant sharing not only computing infrastructure with other businesses, but services as well.

Most of these services were initially mainframe based, such as IBM’s or Unisys’. IBM itself was a big provider of this kind of integrated IT service, and was not resting on its laurels with just mainframe shared services, having branched out to embrace the PC revolution it helped start. It was Gerstner’s major success and turned the company around.

All this focus on core services might have been a consequence of an initially well-intended reengineering idea, but it ended up being pricey because of vendor lock-in and lack of competition.

The lowering of computing hardware prices brought by ruthless commoditization by Compaq and Dell was so much in full swing, that circa ‘98 I recall working with company after company in migrations from service bureaus to in-house client server systems. Still, it would seem in around 1999 that the “computing utility” world was for IBM and HP to share, and the typical view of the world was as a customer plugged into the Matrix mainframe.

In the meantime, the technology continued to evolve with rapid progress in virtualization (as shown by the stellar rise of VMware as the de facto standard) in the late 90’s, clustering, and distributed computing (culminating with the Internet).

Most companies opted for in-house farms of inexpensive servers with virtualization software such as VMware. The advent of Linux replacing all things UNIX, together with the Xen hypervisor, set the stage for the displacement of all previous contenders (IBM, HP and Sun) and for the arrival of AWS.

The third wave – the 10’s

How a company that had started selling books online ten years before became the major proponent of selling computing as a utility (now called “public Cloud” services everywhere) is a fascinating topic in itself. However, it was not without precedent: the infamous Enron had already set itself the target “to trade bandwidth like it traded oil, gas, electricity, etc”. Amazon pretty much used its existing ability to sell anything to sell one more thing: the excess capacity of its data centers.

It took an amazing turn-around effort from Microsoft in hiring Ray Ozzie, who championed the cloud and what came to be Azure, and realigning itself for growth. Today you pretty much talk about three clouds: two major contenders with AWS as the giant, Azure coming fast, and Google Cloud a distant third. IBM, HP, Dell/EMC are now the dwarfs:

All this effort has paid off for Amazon and Microsoft. Why is this third time a charm? It is because by 2008, all technological components were in place to provide reliable public computing utility services. From Pfister’s book (originally on clusters, but those are the technical foundations of high availability), here are the standard technical reasons why you want it: performance, availability, price/performance ratio, ability to grow incrementally, scaling.

According to Pfister, there is also the “scavenging of unused computing cycles” as a reason why you want computing clusters, and therefore, the Cloud. On top of the technical platform, Amazon also had the logistics and economics patterns figured out, and now so do the other providers.

The Cloud also summarizes and commoditizes all the best implementation patterns around, from software design patterns, to architectural ones, to its own cloud computing patterns. It is a massive collation and redistribution of knowledge, and on this foundation rests its stability.

Public computing utilities are here to stay. Cloud Computing now finally has a chance to fulfill the potential envisioned at MIT in the early 60’s.

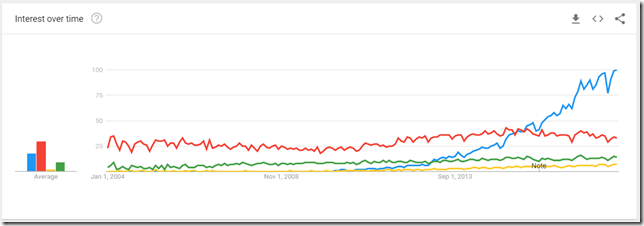

DevOps has great momentum in the industry at this point, as can be seen by the growth in searches for topics related to it. Unlike “ALM” and “Agile”, which are very generic terms and make it difficult to filter out the noise, “DevOps” is unique and allows a more clear-cut picture. Let’s take a look at it using Google Trends.

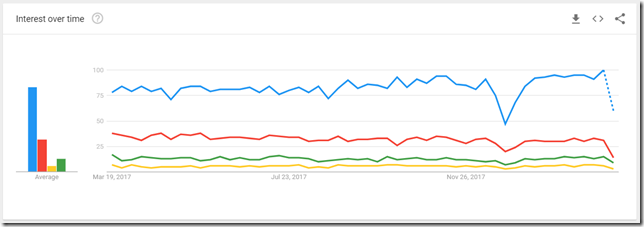

If you look into just the last year, when compared to “Application Lifecycle Management” and other trendy topics in IT, it seems that the curve is pretty much stable:

You have to look at the big picture to understand the magnitude of the current momentum DevOps has:

On the conservative side, we can gather a couple of things from those numbers:

- Application Lifecycle Management has always been a relatively popular topic, but never caught on as a really growing trend;

- Continuous Delivery and DevOps came from no presence to steady growth for the former, and explosive growth for the latter.

- The idea of Continuous Integration had existed for a while before it was codified in the well-known Martin Fowler article.

On the outrageously optimistic side, for those of us who worked under that umbrella, and thought it was big, the prospects of DevOps dwarf all trends that have come before. You need only say: WOW!

Will the DevOps buzz quickly stabilize into an S-curve, plateauing in the near future? There is no doubt it will flatten out. However, given this fantastic momentum I think it will last several years until we start to see the next trend.
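For readers unfamiliar with the term, an S-curve is usually modeled with a logistic function: slow start, rapid growth around a midpoint, then a plateau at a ceiling. The sketch below only illustrates that shape; the parameter values are hypothetical and are not fitted to Google Trends or any other real data.

```python
import math


def logistic(t, ceiling, growth_rate, midpoint):
    """Value of a logistic (S-shaped) curve at time t."""
    return ceiling / (1.0 + math.exp(-growth_rate * (t - midpoint)))


# Hypothetical parameters chosen only to show the shape of the curve.
CEILING = 100.0      # level at which interest plateaus
GROWTH_RATE = 0.8    # steepness of the growth phase
MIDPOINT = 2016.0    # year of fastest growth

for year in range(2010, 2023, 2):
    print(year, round(logistic(year, CEILING, GROWTH_RATE, MIDPOINT), 1))
```

At the midpoint the curve sits at exactly half the ceiling, and past it each year adds less than the one before, which is the flattening described above.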

Working with cutting-edge DevOps is one of the reasons I joined Nebbia, and hopefully I can contribute to our DevOps team as much as I am getting from them.

It is common to talk about “last mile” adoption problems for both Agile (usually traditional QA teams, in other cases, traditional business teams) and now DevOps (again QA and Data teams as the typical bottlenecks).

I have recently been working with the Microsoft Cognitive Toolkit (CNTK), and asked myself the same question about how to create a continuous delivery pipeline for AI applications. If AI implementations grow as much as Gartner is predicting, then deploying AI apps has a good chance of becoming the next “last mile” problem.

Researching this, I came across an article on DevOps for Data Science and AI: Team Data Science Process for Developer Operations, with the description “This article explores the Developer Operations (DevOps) functions that are specific to an Advanced Analytics and Cognitive Services solution implementation.”

However, instead of the (more recently) clear-cut how-to documentation that Microsoft has been providing as part of its VSTS products, this article seemed more like a list of building blocks that need to be distilled into at least a nice simple sample implementation.

Investigating the Azure Data Science Virtual Machine seems the next step to better understand how everything is connected. Among other things it has CNTK installed, which is what is attracting my attention in this area. As a production solution, though, it is not the final answer, so a lot still has to be done to define a process to deliver from this machine as a development environment to a production business server.

I will continue experimenting and will let you guys know next. My ultimate goal would be to use some version of Prolog.

I just returned from Mexico where I worked for two weeks with one of the country’s biggest companies, and delivered the first Microsoft DevOps FastTrack there (all in Spanish ;-)). It was a fantastic experience to work with such a tight knit team (about 16 people).

For obvious reasons I can’t share too many details about the engagement. I can however share one key fact that pretty much won over their hearts and minds on adopting VSTS not only for this project, but also for the rest of the company as well. Here is the list of tools we used:

Programming languages:

- Jersey with Java 8 (JDK 1.8)

- JavaScript with Angular 1.X

- HTML 5, CSS3 with a Bootstrap template

Programming tools:

- NetBeans 8.2

- Visual Studio Code 1.19

Dependency Managers:

Testing frameworks:

- JUnit and RestAssured for Java

- Selenium for frontend

HTTP Servers:

- Tomcat 8.54

- Apache httpd 2

Database:

- PostgreSQL 9.6, client PgAdmin 9.3

- Flyway 5.0.5

Version Control:

As you can see, it was all done using either OSS or non-Microsoft tools. The only Microsoft tool (other than VSCode) was VSTS, for project management and DevOps, and also for overall orchestration of the whole process.

So we started with a skeptical team thinking Microsoft (me on their behalf) was going to try and impose a Microsoft tool stack, and ended up with an elated team using their existing toolset, coordinated with VSTS. For them this was a very powerful statement from Microsoft about its support for OSS and tool diversity: we can work with all kinds of toolsets.

Having worked with Microsoft during a time when it was heavily focused on just pushing its own products, I find the current general attitude of respect, openness and inclusiveness I am getting from all parts of Microsoft a breath of fresh air, and customers are noticing (for a non-code example, see for instance this article).

I have been selected to present at Visual Studio Live! this year. These are the two topics I will be presenting on:

Many "scaling agile" efforts have failed for lack of focus on delivering working software on a regular basis. They have instead just been a refactoring of processes to gather requirements. It is easy to ignore tooling, development, and down-to-the-metal DevOps practices when talking about scaling agile. However, these can be a boon or a bane to achieving working software, meaning tested software, and as with any process implementation, agile at scale is no different.

You will learn:

- How most agile scaling efforts focus just on restructuring backlogs and leave developers behind

- How to identify DevOps and tooling bottlenecks, and align the implementation of agile at scale with the Agile Manifesto: working software over comprehensive documentation

- Rules of thumb derived from real world experience on doing DevOps when scaling agile

and the second talk will be

Knowledge management is often touted as one of the major applications of SharePoint and similar tools that made them widely successful. Oddly, the shoemaker's children go barefoot. Companies lose millions every year because of their inability to actively manage the intellectual capital invested in the applications they develop. VSTS lets you take control of this situation by using it as your team's communication hub and expanding it with practices and strategies to truly manage the knowledge supporting your applications.

You will learn:

- The hidden risk of not managing the intellectual capital behind your code

- How to use VSTS as a communications hub for your team and a knowledge repository for your application, plus usage strategies to maximize long term value

- Fundamental strategies, best practices, and rules of thumb for preserving and managing a development team's knowledge of an application, minimizing attrition risk

The conference is from April 30th to May 4th at the Hyatt Regency. I don’t yet have the schedule of when I will be presenting, so I will update this post when I know it. You can register at https://vslive.com/events/austin-2018/home.aspx.

See you there!

There are plenty of DevOps meetups in Austin (amongst them the Agile Austin DevOps SIG), but ever since I worked with fellow TFS users in town in leading the local TFS Users Group, later renamed the Austin ALM User Group, there was always the question of whether it should actually be a DevOps group.

The initial resistance came from the misconception that DevOps was just the end part where software meets production. Along the way we talked about merging our efforts with the .NET user group.

So, after a hiatus, since September this year Austin finally has the Microsoft DevOps User Group (MDOUG), spearheaded by Jeff Palermo from Clear Measure, with myself and others as co-organizers.

Here is the group mission:

This group is for software professionals working on the Microsoft platform who wish to explore, implement, and learn DevOps. This group embraces cloud and as such will bring in the best presenters for each topic while live streaming all content. Additionally, this meetup serves as the hub location, providing an example of other MDOUG live-stream locations in major cities around the U.S. This group recognizes the technical practices included in the DevOps Handbook by Gene Kim, Jez Humble, Patrick Debois, and John Willis. We encourage members to read this book. From a technology perspective, we seek to implement these practices on Visual Studio-based software, leveraging VSTS, and running on Azure or on Windows servers anywhere.

This is a worthy successor to the original Austin ALM/TFS User Group, and I recommend that everyone become a member. Amongst other presenters we have had Brian Harry himself speak to the group, followed by Damian Brady, both from Microsoft. And now I am recommending that we have Arun Chandrasekhar on DevOps with Azure and OSS.

See you there!

As MVPs, we participate in meetings with Microsoft to provide feedback or to learn about some upcoming new product delivery. This is one of the finest opportunities of being an MVP: to be at the cutting edge of Microsoft technologies.

In October there was an internal MVP meeting with Microsoft on DevOps with Azure. Without going into the specifics, which are under NDA, I want to mention that this one caught me by surprise. When I got the invite, I only looked at the meeting title “Azure DevOps OSS + Q&A with Arun Chandrasekhar”, and took for granted it was about OSS VSTS integrations with Azure: nothing new for me, as I have been doing this for a while.

However, I was blown away by Arun’s presentation: it was about how to do DevOps with OSS tooling, especially Jenkins and Terraform. At my previous job I had worked extensively with Jenkins, and was one of the architects of a build infrastructure that ended up being used by a couple thousand developers.

What I was not aware of, though, and Arun’s presentation helped me close that gap, was that there is an extensive set of existing integrations with Jenkins for Azure, including Azure VM Agents and an Azure-ready VM for Jenkins, among others:

To see a couple of those as demos, I recommend watching the two videos from Arun with Donovan Brown:

And the video from Jenkins World 2017 presentation: https://www.youtube.com/watch?v=buQNF1sekq8

There is an entire world of possibilities to be explored, such as Azure DevOps Solution Architectures that are based on Jenkins and OSS:

Given my previous interest and work with Jenkins I will be learning a lot after delving into those references. In conclusion: it’s always good when something forces you to step out of your comfort zone, and meeting Arun has definitely been a great opportunity.

From the last few posts you might have noticed that I have been following up on the DevOps FastTrack offering and the training provided by Microsoft in September, and on how valuable that has been for me in understanding what a cutting-edge DevOps practice can be. The VSTS team in that regard is as cutting edge as Facebook’s, Google’s or Netflix’s. They are not bound to adopting practices such as SAFe (that was a question asked in one of the talks); rather, they are defining their own because they are at a place where they are the pioneers (not of the individual practices, but of their use at scale). They are operating at the Ri level: they are transcending what is currently known.

It was a stated purpose that we as MVPs and Microsoft partners should share these presentations with our customers. I have been doing that in every engagement since then, always starting with the summary one made by Lori Lamkin.

Now the great surprise: Sam Guckenheimer has worked with the team that presented to remove any NDA references, and has now shared the whole set of talks at DevOps at Microsoft. This is a treasure trove on how to do DevOps with VSTS and beyond. I am organizing sessions at our company (Nebbia) so we can watch it together and use it as inspiration for next steps on our own DevOps journey.

Thank you very much for attending! It was one of the biggest crowds ever, considering our small venue (we had 30+ people in the room, and 14 on the waiting list). Next time we might need a bigger room, and Leland and I are considering other options depending on the interest in future presentations.

Microsoft Agile Transformation and Planning.pdf (1.1MB)

I also just learned from Aaron Bjork that he has created a clean presentation of this content (there were a lot of down-the-rabbit-hole discussions at the FastTrack training) and posted it on YouTube.

My presentation of course was based on my own experience at Microsoft VSTS in 2007, whereas Aaron joined the leadership team later, so he actually saw the pain at a critical point, whereas I had just witnessed it when it was starting to build. Of course nothing compares to hearing it directly from the source. However, as some of you pointed out, the historical perspective was just as valuable, and thank you for your kind remarks :-). That said, I strongly recommend watching Aaron’s presentation as well.