Please join us on August 28th for another Austin ALM User Group meeting.

Presentation: TFS 2015 Panel - New Features and Installation/Upgrade Experiences

Join us on August 28th to review the new features of TFS 2015, share your installation and upgrade experiences, and discuss any topics that would help you get ready for an upgrade.

Speakers

The panel will consist of the Austin ALM User Group leadership, plus a couple of Microsoft ALM Specialists.

Location:

Microsoft Technology Center: Austin

Quarry Oaks Atrium

Bldg. 2 - 2nd Fl.

10900 S. Stonelake Blvd.

Austin, TX 78759

To ensure a seat and lunch, please register at: https://www.eventbrite.com/e/tfs-2015-panel-new-features-and-installationupgrade-experiences-tickets-18159555720

Agenda:

11:30 - Noon:

Noon - 1PM: Presentation and Discussion

I have been working with the Jenkins build management system since last July, helping our internal Java, iOS, and some .NET development teams implement Continuous Integration. The objective is also to coordinate their builds with SonarQube so that we get cross-collection visibility into the status of projects that span multiple teams.

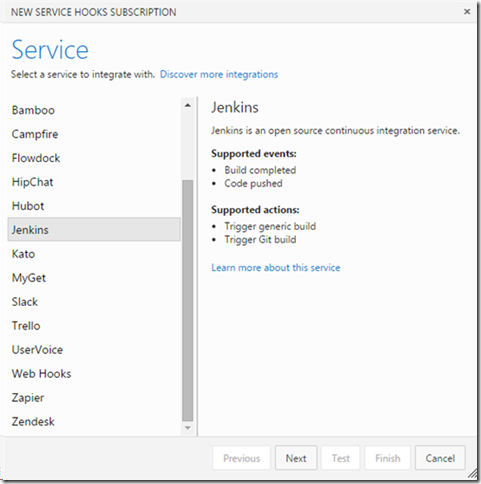

Microsoft has recently released the ability to integrate Jenkins builds with VSO through service hooks.

Had this been available for TFS on premises a year ago, we might have taken a different route for our Jenkins/SonarQube implementation, since what we are mostly interested in is the composite report of all our application builds and deployments (through pipeline statuses).

For my own work in the cloud I have been using the fantastically flexible new Build.vNext system. As for that composite Build/Release report, you get it almost effortlessly with VSO/Azure, in contrast with our current solution, which is manually intensive.

Let’s see how our work evolves. I will be looking at this again, especially if it makes it into TFS on premises in the near future.

ScrummerFall, WaterScrum, WaterScrumFall: over the years, and as recently as last month, I have seen many people refer to these Scrum "aberrations" as if they were the same thing. For the record, here is what each one means:

- ScrummerFall: "embedding Waterfall inside of Scrum. This often manifests in what I call the One-Two-One pattern: one week of design, two weeks of coding, one week of test and integration. I've yet to see a team that was long term successful with such a system, especially if they are strongly rooted in historical Waterfall." Aaron Erickson calls it the World's Worst Software Development Methodology. In my opinion, it earns that title because it is unethically deceptive: it promises renewal, but is built on a complete mirage of Agile adoption. Picture it this way: you are thirsty for a fresh flow of Agile ideas in a "Waterfall" desert, and as you get closer the oasis disappears and you slam your face into the sand.

- WaterScrum: "Co-dependent Waterfall and Scrum projects. Similar to trying to play (American) football and soccer on the same field at the same time, requiring extra levels of coordination and timing." That is, you are trying to abide by Scrum at the team level and by Waterfall at the corporate level at the same time.

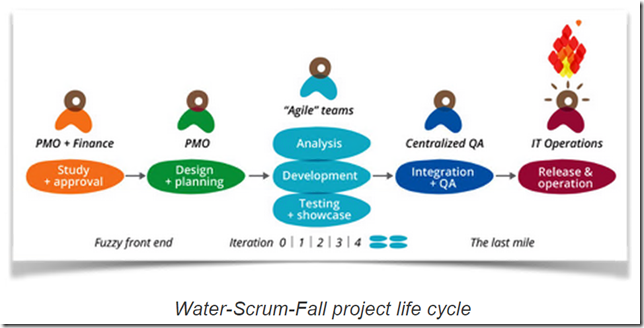

- WaterScrumFall: "1) Water – Defines the upfront project planning process that typically happens between IT and the business. 2) Scrum – An iterative and adaptive approach to achieving the overall plan that was first laid out in the 'Water' stage. 3) Fall – A controlled, infrequent production release cycle that is governed by organizational policy and infrastructure limitations."

ScrummerFall and WaterScrum are, in reality, team dysfunctions caused by a lack of experience with Agile. They can be fixed at the team level by a good Scrum Master or Agile Coach who guides the team toward optimized, clean Agile practices.

As for WaterScrumFall, Manoj Sanhotra found a silver lining in using it for incremental adoption of Agile practices, and even proposed a way to take it to the next level. The picture below, from his post, illustrates the general idea of WaterScrumFall:

But as Dave West said, this is still the development process of most companies, and in my experience it has been the most frequent source of friction when adopting Agile. Across the 100-plus customers I have seen, Agile teams tend to end up stifled and suffocated by the bureaucracy and controls created by all the hand-offs between silos. In all my years working with ALM, centralized PMO and QA teams have been the last mile of Agile adoption everywhere I have been. However, I do not agree with Sanjeev Sharma that WaterScrumFall is here to stay.

WaterScrumFall stems from confusing Governance phases with Execution steps. Some have tried to fix this situation at the PMO level by adopting an Agile Portfolio Management strategy, others by adopting a DevOps-oriented approach to QA and Deployment.

Scaling frameworks such as SAFe and Enterprise Scrum focus on optimizing from the PMO to Operations. The only frameworks I am familiar with that handle this optimization starting from idea inception are Lean and Nexus. This matters because it is the business itself that has to become Agile, and until that happens, the "throw-it-over-the-wall" handoff attitude will continue to exist among the fiefdoms of the modern enterprise.

Paul Barham from Microsoft wrote an article about how Visual Studio Online Supports True Cross-Platform Development. As I mentioned in a previous blog post, this has been a welcome new trend from Microsoft as it expands its cross-platform capabilities, making VSO more useful for large enterprises with heterogeneous environments. Notice the list of available service hooks:

The article briefly summarizes the many ways VSO enables cross-platform development: from using Git for source control (and thus supporting, for instance, Xcode), to allowing different build systems such as Jenkins to provide Continuous Integration, to tying it all together and making it traceable with the team web backlog.

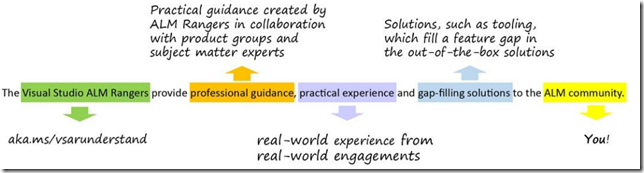

The ALM Rangers leadership has banded together to condense their experience in a new book, Managing Agile Open-Source Software Projects with Microsoft Visual Studio Online (ISBN 9781509300648), by Brian Blackman, Gordon Beeming, Michael Fourie, and Willy-Peter Schaub. Even better, it is available as a free ebook download from MSPress.

One picture from the book should suffice to encourage you to take a deeper look into the dynamics of the ALM Rangers and how they have been able to anticipate and complement the Visual Studio team in helping customers of all sizes get the best out of Microsoft's ALM tools:

I know all of the authors personally, which allows me to say that this book represents just the tip of the iceberg of what they do for the ALM community. My question has always been: how do they do it? The book details some of the organizational practices that, through their leadership, have been adopted at large by the Rangers. It should be no surprise that the success of the Rangers program is rooted in principled leadership, as is everything else (Stephen Covey being someone who has summarized what humankind has known for centuries).

I mentioned last week that I would come back and talk about some fantastic Build 2015 sessions on DevOps and TFS.

I recommend watching all seven of those sessions. If you don’t have the time, here are the ones that are a must-see:

Using Visual Studio, Team Foundation Server, Visual Studio Online, and SonarQube to Understand and Prevent Technical Debt

Technical debt is the set of problems in a development effort that make forward progress on customer value inefficient. Technical debt saps productivity by making code hard to understand, fragile, and difficult to validate, and it creates unplanned work that blocks progress. SonarQube is the de facto open source tool to manage down technical debt.

I have been working with the Rangers to release some documentation on how to install and configure SonarQube for a simple scenario, so this talk has been a great complement to what we have been doing.

Managing Cloud Environment and Application Lifecycle Using Azure Tools and Visual Studio Online

The modern way of development requires frequent changes not only to the application itself but also to your environments. Those changes demand a set of tools that make those tasks shareable, configurable and repeatable. In this session we’ll talk you through how to use Visual Studio tools for Azure to build and author Azure environments as well as test your applications in those environments. We will also show you how to take advantage of Visual Studio Online and Release Management for Visual Studio to automate the entire process and push environmental and application changes through a staged environment.

This presentation was given by Claude Remillard, one of the program managers for Release Management, which to me indicates that some of these tools are also coming to TFS on premises. More than that, you can see how the Ops side of ALM is now being addressed in Microsoft's long-term view of ALM.

Last week at Build the Visual Studio team had some fantastic sessions on DevOps and TFS. I might come back and comment on a couple of them that really caught my attention.

The most exciting thing, though, was the announcement of the TFS Release Candidate, which you can get here. I could go on about features, but it would be better for you to read it straight from the horse’s mouth on Brian Harry’s blog. There were so many new things to check out that I had to read the post twice to make sure I was not missing anything. My two favorite topics were Release Validation, one of the last miles of DevOps, and Agile project management (many long-sought-after improvements in the UX area).

A quick installation went without any issues. I will be testing a couple of upgrades in the next few weeks. We are not planning to use the “go live” option, but it seems stable enough to do so. It’s looking good!

In the last couple of months I have been working with the ALM Rangers on investigating how to deal with Technical Debt, and particularly how to use SonarQube with existing TFS 2013 environments.

The current result of this research is a short but to-the-point guide on how to do a SonarQube installation for an existing Team Foundation Server 2013 single-server environment. You can get it from CodePlex at http://vsarguidance.codeplex.com/releases/view/614602.

Proactively adapting SonarQube for use with TFS and VSO is yet another example of Microsoft’s repositioning, after earning one of the leadership positions in the Gartner report, toward an even more open ALM platform that now allows seamless cross-platform development, including all kinds of mobile applications.

Update: This guide has been moved to GitHub.

Last January I mentioned Scrum Day in Dallas on March 27th. The meeting has now happened, and we have a few slide deck PDFs for your reference:

- Keynote by Ken Schwaber: Scaled Scrum - Download

- Practical Scaling - Download

- The New New Product Owner - Download

- Team Performance: The Impact of Clean Code & CD - Download

This last session was nice and typical of Scrum.org: most of its trainers are also developers and highly technical, with an emphasis on what Agile teams look for.

As for the face-to-face meetings, I will go over some of the content in another post.

Scrum.org has been working for a while on expanding and bringing more structure to the original ideas on how to scale Scrum brought forth in the book Agile Project Management with Scrum. Now it has launched a Scaled Professional Scrum course and Scrum Practitioner Open Assessment.

The Scaled Professional Scrum course covers scaling agile concepts and Nexus in particular: “Nexus™ Framework and approximately 40 practices which cause the Nexus and your scaling initiative to operate predictably.”

The Scrum Practitioner Open assessment complements the many other assessments Scrum.org provides and allows you to benchmark your ability to participate in a Scrum team, with a focus on multiple teams engaged in a scaled development initiative.

Here is a nice interview with Ken Schwaber at InfoQ: http://www.infoq.com/news/2015/03/assessment-program.

I will be coming back to this topic with an in-depth analysis of how it compares with other frameworks. For the moment, let us just say congratulations to all who have contributed to the effort.